[NOTE: After finishing this post, I found that the substitution used below is famous enough to have a name (the tangent half-angle, or Weierstrass, substitution), and that Spivak called it the “world’s sneakiest substitution”. Glad I’m not the only one who thought so.]

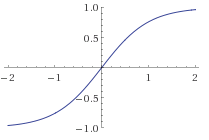

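In the course of working through some (very good) material on neural networks (which I may try to write up here later), I noticed that it is beneficial for a so-called “activation function” to be expressible as the solution of an “easy” differential equation: training by backpropagation needs the derivative of the activation at each point where the activation was just evaluated, and such an equation gives that derivative directly in terms of the value already computed. Here by “easy” I mean something closer to “short to write” than “easy to solve”.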

In particular, two often-used activation functions are

$$\sigma(x) = \frac{1}{1+e^{-x}}$$

and

$$\tau(x) = \tanh(x) = \frac{e^{x}-e^{-x}}{e^{x}+e^{-x}}.$$

One might observe that these satisfy the equations

$$\sigma'(x) = \sigma(x)\bigl(1-\sigma(x)\bigr)$$

and

$$\tau'(x) = 1-\tau(x)^2.$$
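A quick numerical check of both identities, written so that each derivative is computed only from the already-computed value s = σ(x) or t = τ(x). This is a minimal sketch in plain Python; the helper names are illustrative, not from any library, and the central difference is only there to check the identities.

```python
import math

def sigma(x):
    """Logistic activation: 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))

def sigma_prime_from_value(s):
    """Derivative of sigma, written in terms of the value s = sigma(x)."""
    return s * (1.0 - s)

def tanh_prime_from_value(t):
    """Derivative of tanh, written in terms of the value t = tanh(x)."""
    return 1.0 - t * t

def central_difference(f, x, h=1e-5):
    """Numerical derivative, used only to check the identities above."""
    return (f(x + h) - f(x - h)) / (2.0 * h)

for x in [-3.0, -0.5, 0.0, 1.0, 2.5]:
    s = sigma(x)        # the "forward pass" values
    t = math.tanh(x)
    # The derivatives reuse s and t; no further exponentials are evaluated.
    print(f"x={x:5.2f}  sigma'={sigma_prime_from_value(s):.6f} "
          f"(numeric {central_difference(sigma, x):.6f})  "
          f"tanh'={tanh_prime_from_value(t):.6f} "
          f"(numeric {central_difference(math.tanh, x):.6f})")
```

Once the forward value is stored, each derivative costs a single multiplication and subtraction, which is the practical payoff of the “easy” equations.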

By invoking a theorem of Picard, Lindelöf, Cauchy and Lipschitz (I was only going to credit Picard until Wikipedia set me right), we know that if we start from these (separable) differential equations and fix a single point, we are guaranteed to end up at the functions above. To solve the second, I separated variables and substituted τ = cos(u), so that dτ = −sin(u) du and 1 − τ² = sin²(u), and found that

$$\int dx = \int \frac{d\tau}{1-\tau^2} = -\int \frac{\sin(u)}{\sin^2(u)}\,du = -\int \csc(u)\,du,$$

and shortly after that, I realized I had no idea how to integrate csc(u). Obviously the internet knows (substitute v = cot(u) + csc(u) to get ∫ csc(u) du = −log|cot(u) + csc(u)|), which is a really terrible answer, since I would never have gotten there myself.
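Terrible or not, the looked-up answer is easy to sanity-check. A minimal sketch, assuming we stay on 0 < u < π (where sin(u) > 0), that compares a central-difference derivative of −log|cot(u) + csc(u)| with csc(u); the function names are mine.

```python
import math

def internet_antiderivative(u):
    """The looked-up answer: -log|cot(u) + csc(u)|."""
    return -math.log(abs(1.0 / math.tan(u) + 1.0 / math.sin(u)))

def check(u, h=1e-6):
    """Return (central-difference derivative of the antiderivative, csc(u))."""
    numeric = (internet_antiderivative(u + h) - internet_antiderivative(u - h)) / (2 * h)
    return numeric, 1.0 / math.sin(u)

for u in [0.3, 1.0, 2.0, 2.8]:
    numeric, exact = check(u)
    print(f"u={u}: derivative ~ {numeric:.8f}, csc(u) = {exact:.8f}")
```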

Instinctively, I might have tried the approach to the right, which gets you back to where we started, or tried changing the numerator to cos²(u) + sin²(u), which leads to some amount of trouble, though intuitively this feels like the right way to do it. Indeed, eventually this might lead you to using half angles (and avoiding integrals of inverse trig functions). Writing 1 = cos²(u/2) + sin²(u/2) and sin(u) = 2 sin(u/2) cos(u/2), we find

$$\int \csc(u)\,du = \int \frac{\cos^2(u/2) + \sin^2(u/2)}{2\sin(u/2)\cos(u/2)}\,du.$$

Avoiding the overwhelming temptation to split this integral into summands (which would leave us with a cot(u/2) to integrate), we instead divide the numerator and denominator by cos²(u/2) to find

$$\int \csc(u)\,du = \int \frac{1 + \tan^2(u/2)}{2\tan(u/2)}\,du.$$

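Now substituting v = tan(u/2), we find that dv = ½(1 + tan²(u/2)) du = ½(1 + v²) du, so making this substitution, and then undoing all our old substitutions (v = tan(u/2) and u = arccos(τ)):

$$x + C_0 = -\int \frac{1+v^2}{2v}\cdot\frac{2\,dv}{1+v^2} = -\int \frac{dv}{v} = -\log|v| + C = -\log\left|\tan\left(\frac{\arccos(\tau)}{2}\right)\right| + C.$$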

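Using the half-angle formulae that everyone of course remembers, in particular tan(u/2) = sin(u)/(1 + cos(u)) = √((1 − τ)/(1 + τ)) for 0 < u < π, and dropping the C (remember, there’s already a constant on the other side of this equation), this simplifies to (finally)

$$x + C_0 = \frac{1}{2}\log\left(\frac{1+\tau}{1-\tau}\right).$$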

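Subbing back in and solving for τ (fixing the single point τ(0) = 0, which makes the constant on the left vanish) gives, as desired,

$$\tau(x) = \frac{e^{2x}-1}{e^{2x}+1} = \tanh(x).$$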

Phew.
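Since the substitution is the sneaky part, here is a numerical check of it, as a sketch under the assumption 0 < a < b < π: a composite Simpson's rule estimate of ∫ csc(u) du over [a, b] should match log|tan(b/2)| − log|tan(a/2)|, and the expression we had right after undoing the substitutions, −log tan(arccos(τ)/2), should agree with the simplified ½ log((1 + τ)/(1 − τ)) and with Python's `math.atanh`. Simpson's rule is my choice of quadrature, not part of the derivation. (The looked-up answer agrees too: cot(u) + csc(u) = (1 + cos(u))/sin(u) = cot(u/2), so −log|cot(u) + csc(u)| is exactly log|tan(u/2)|.)

```python
import math

def simpson(f, a, b, n=2000):
    """Composite Simpson's rule for the integral of f over [a, b]."""
    if n % 2:
        raise ValueError("n must be even")
    h = (b - a) / n
    total = f(a) + f(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(a + k * h)
    return total * h / 3

def csc(u):
    return 1.0 / math.sin(u)

def half_angle_antiderivative(u):
    """The result of the substitution v = tan(u/2): log|tan(u/2)|."""
    return math.log(abs(math.tan(u / 2)))

a, b = 0.4, 2.6
print("Simpson:   ", simpson(csc, a, b))
print("half-angle:", half_angle_antiderivative(b) - half_angle_antiderivative(a))

for tau in [-0.9, -0.2, 0.0, 0.5, 0.95]:
    before = -math.log(math.tan(math.acos(tau) / 2))  # after undoing the substitutions
    after = 0.5 * math.log((1 + tau) / (1 - tau))     # after the half-angle simplification
    print(tau, before, after, math.atanh(tau))
```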

![The [activation] function sigma.](https://colindcarroll.wordpress.com/wp-content/uploads/2012/12/logistic.gif?w=640)
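The Picard–Lindelöf point can be watched in action too: start from each differential equation, fix one point (σ(0) = ½ and τ(0) = 0, my choice), and integrate numerically; the results should track the closed forms. A minimal sketch using the classical fourth-order Runge–Kutta method, which is a swapped-in numerical technique rather than anything from the derivation above:

```python
import math

def rk4(f, y0, x_end, steps=1000):
    """Classical fourth-order Runge-Kutta for the autonomous ODE y' = f(y),
    starting from y(0) = y0 and stepping to x_end (which may be negative)."""
    h = x_end / steps
    y = y0
    for _ in range(steps):
        k1 = f(y)
        k2 = f(y + h * k1 / 2)
        k3 = f(y + h * k2 / 2)
        k4 = f(y + h * k3)
        y += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
    return y

def sigma_ode(s):
    return s * (1 - s)   # sigma' = sigma (1 - sigma)

def tanh_ode(t):
    return 1 - t * t     # tau' = 1 - tau^2

for x_end in [0.5, 2.0, -3.0]:
    s = rk4(sigma_ode, 0.5, x_end)   # fixed point sigma(0) = 1/2
    t = rk4(tanh_ode, 0.0, x_end)    # fixed point tau(0) = 0
    print(f"x={x_end:5.1f}  RK4 sigma={s:.10f}  exact={1 / (1 + math.exp(-x_end)):.10f}  "
          f"RK4 tau={t:.10f}  tanh={math.tanh(x_end):.10f}")
```

Starting instead from, say, τ(0) = 0.5 would give the shifted curve tanh(x + artanh(0.5)); choosing the point is exactly what pins down the constant in the derivation.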
